This is part 4 in a series of posts about writing service brokers in .NET Core. All posts in the series:

- Marketplace and service provisioning

- Service binding

- Provisioning an Azure Storage account

- Binding an Azure Storage account (this post)

In the previous post we implemented provisioning and deprovisioning of an Azure Storage account. Because that post was already quite long, we deferred binding and unbinding to the post you're reading now.

All source code for this blog post series can be found here.

Azure Storage account authorization

When we bind an application to an Azure Storage account, we must provide the application with the means to authorize against the account.

There are a few ways to authorize for Azure Storage:

- Azure Active Directory: the client application requests an access token from Azure AD and uses this token to access the Azure Storage account. This is supported only for blob and queue storage.

- Shared Key: requests are signed with one of the storage account access keys.

- Shared Access Signatures (SAS): a URI that grants access to Azure Storage resources for a specific amount of time.

- Anonymous access: anonymous access can be enabled at the storage container or blob level.

SAS tokens will not work for a service broker because they are valid for a limited amount of time. And since it's not a lot of fun to write a binding implementation for anonymous access we'll skip that as well.

That leaves us with shared keys and Azure AD. Since Azure AD authorization for Azure Storage is in beta, I guess that would be a nice challenge 😊 And of course it is still possible to provide the shared key as well so client applications can choose between Azure AD and shared keys as their means of authorization.

What are we building?

When we bind an Azure Storage account we need to provide the application that we bind to with all the information that is necessary to access the storage account. So what information does a client application need?

First we need the storage account URLs. These are URLs of the form <account>.blob.core.windows.net, <account>.queue.core.windows.net, <account>.table.core.windows.net and <account>.file.core.windows.net.
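As a minimal sketch of how these endpoints can be derived from the account name, the snippet below builds all four URLs. The helper name BuildStorageUrls is hypothetical, and I assume the default Azure public cloud suffix core.windows.net; the account name is the one that appears later in this post.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: builds the four service endpoints for a storage account.
// Assumes the Azure public cloud DNS suffix "core.windows.net".
static IReadOnlyDictionary<string, string> BuildStorageUrls(string accountName)
{
    var urls = new Dictionary<string, string>();
    foreach (var service in new[] { "blob", "queue", "table", "file" })
    {
        urls[service] = $"https://{accountName}.{service}.core.windows.net";
    }
    return urls;
}

var storageUrls = BuildStorageUrls("65cef50071f949f0819c5308");
foreach (var entry in storageUrls)
{
    Console.WriteLine($"{entry.Key}: {entry.Value}");
}
```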

Next is the means to authorize. The client application that we bind to should be able to use the OAuth 2.0 client credentials grant flow, so we need a client id, a client secret, a token endpoint and the scopes (permissions) to authorize for. This means that when we bind, we must:

- create an Azure AD application,

- generate a client secret for the AD application and

- assign the AD application principal to the storage account in a role that gives the right set of permissions.

The client application needs to receive all the necessary information to be able to start an OAuth 2.0 client credentials flow.
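To make this concrete, here is a rough sketch of the token request a bound client application could build from that information. It only constructs the request and prints it; nothing is sent. The tenant id, client id and secret are placeholders, and I assume the Azure AD v2.0 token endpoint together with the https://storage.azure.com/.default scope, which may differ from what the broker in this series hands out.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;

// Placeholder values; a real client would read these from VCAP_SERVICES.
var tenantId = "00000000-0000-0000-0000-000000000000";
var clientId = "11111111-1111-1111-1111-111111111111";
var clientSecret = "example-secret";

// Assumption: the Azure AD v2.0 token endpoint for the tenant.
var tokenEndpoint = $"https://login.microsoftonline.com/{tenantId}/oauth2/v2.0/token";

// Form fields of an OAuth 2.0 client credentials grant request.
var form = new Dictionary<string, string>
{
    ["grant_type"] = "client_credentials",
    ["client_id"] = clientId,
    ["client_secret"] = clientSecret,
    ["scope"] = "https://storage.azure.com/.default", // assumed scope for Azure Storage
};

var content = new FormUrlEncodedContent(form);
using var request = new HttpRequestMessage(HttpMethod.Post, tokenEndpoint)
{
    Content = content,
};

// Print the request instead of sending it.
var body = await content.ReadAsStringAsync();
Console.WriteLine($"POST {request.RequestUri}");
Console.WriteLine(body);
```

Sending the request with an HttpClient should yield a JSON response containing an access_token, which the client then passes as a bearer token on its calls to the storage API.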

We would also like to provide the shared keys for the storage account so the client application can choose how to authenticate: via Azure AD or via a shared key.

Additional permissions for the service broker

The service broker needs some additional permissions besides those from the custom role we defined in the previous post. It should now also be able to create Azure AD applications and assign these to an Azure Storage role.

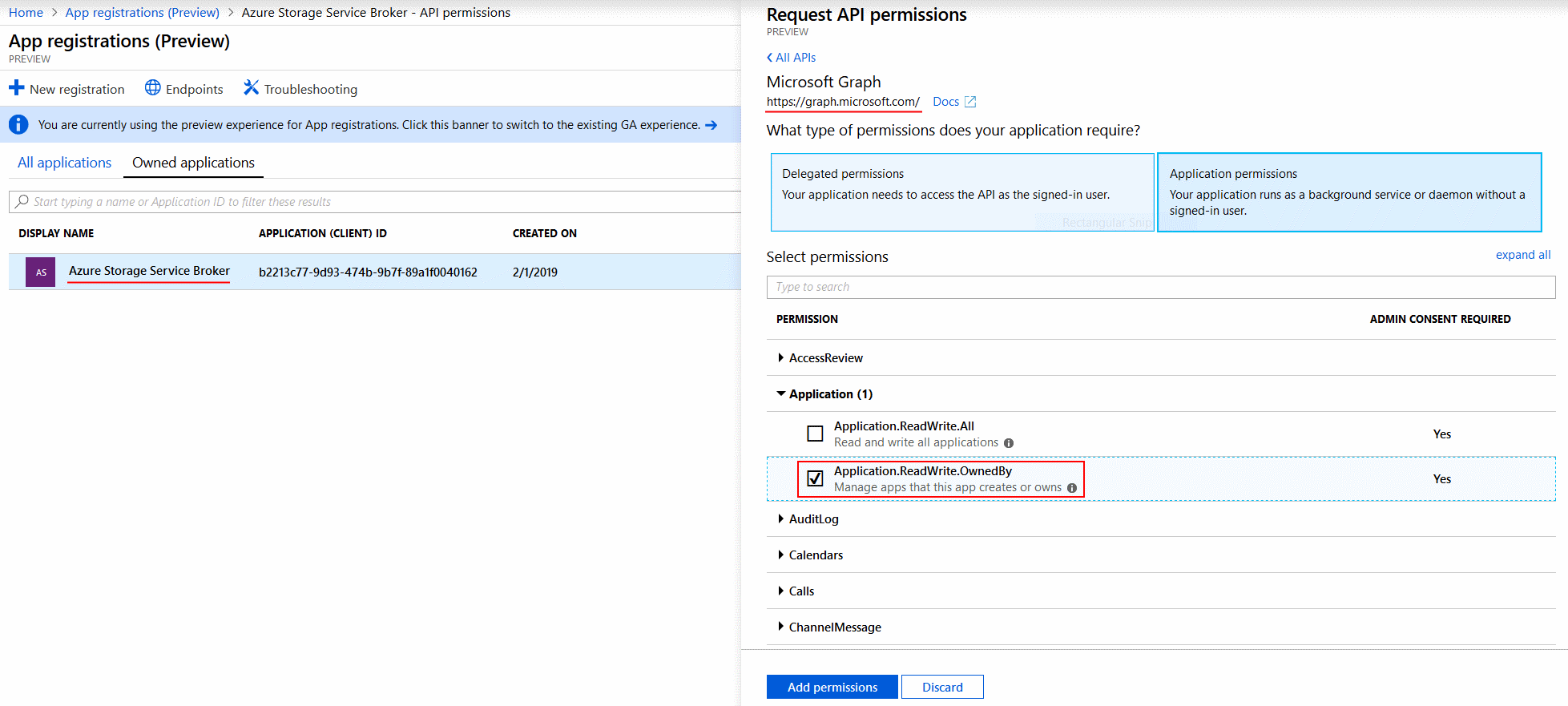

This means we need to assign Microsoft Graph API permissions to the Azure Storage Service Broker AD application:

We assign the Application.ReadWrite.OwnedBy permission so the service broker can manage the AD apps that it owns.

And because we perform the additional action of assigning a service principal to an Azure Storage role, we also need to extend the service broker role definition with one extra permission: Microsoft.Authorization/roleAssignments/write.

The end result

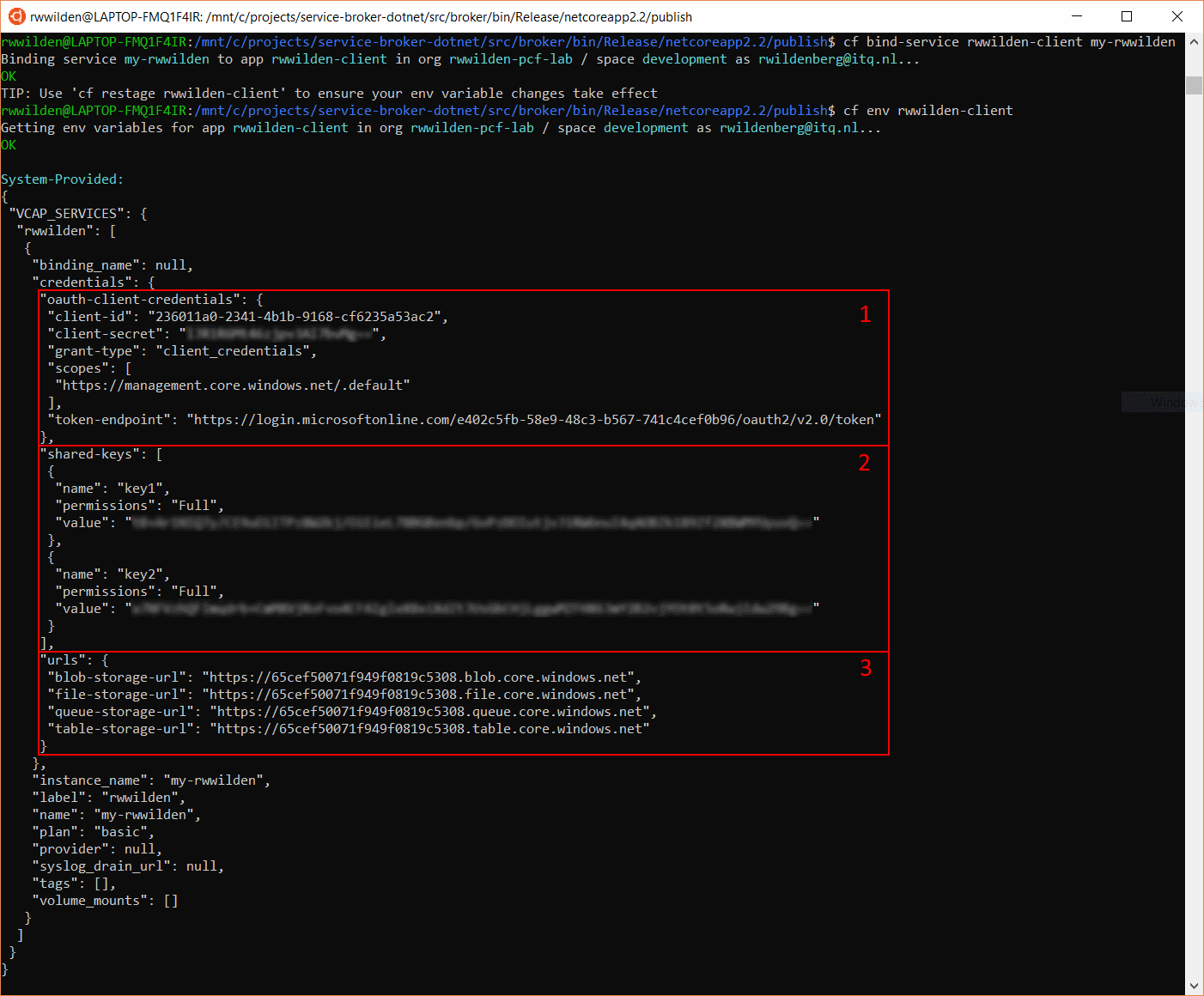

To put things in context, the screenshot below shows the result of a bind operation against the my-rwwilden service.

First we bind the rwwilden-client app to the my-rwwilden service, which is a service instance created by the rwwilden-broker service broker. When provisioning this instance we created an Azure Resource Group and an Azure Storage account (check the previous post for more details).

Next we get the environment settings for the rwwilden-client application, which now has a set of credentials in the VCAP_SERVICES environment variable. In the first block we have the settings that allow the rwwilden-client application to get an OAuth 2.0 access token that authorizes requests to the Azure Storage API. The second block has the shared keys that provide another way to authorize to Azure Storage. And in the third block we see the API endpoints for accessing all storage services.

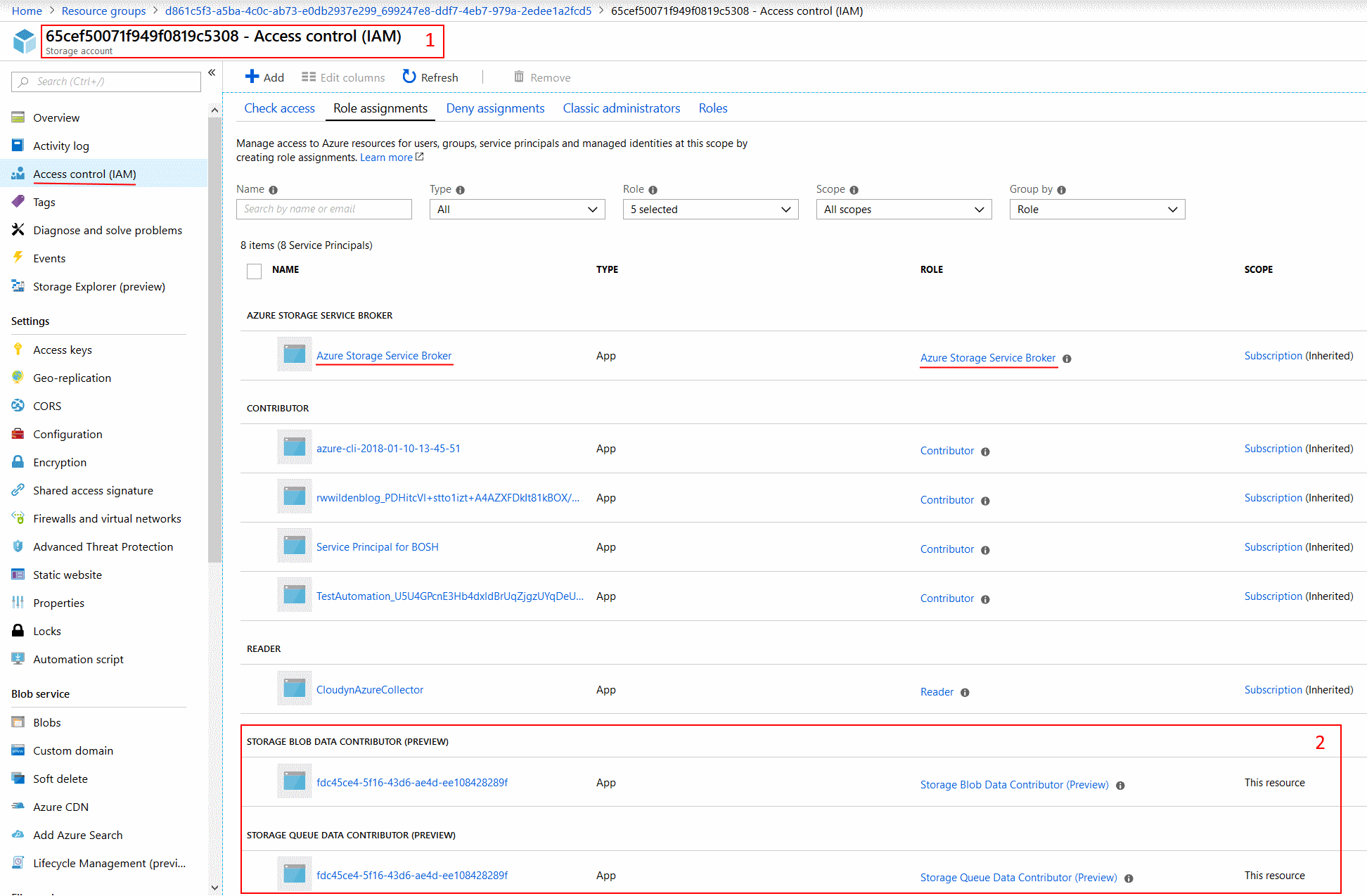

Let's see what this looks like in Azure. Remember, we created an Azure AD app and service principal specifically for the current binding. The service principal is assigned to two roles: Storage Blob Data Contributor (Preview) and Storage Queue Data Contributor (Preview). Let's see whether the principal that was created is assigned to these two roles:

At the top, in box 1, you see that we are looking at a storage account named 65cef50071f949f0819c5308, the same account name that appears in the storage URLs (for example https://65cef50071f949f0819c5308.blob.core.windows.net). At the bottom, in box 2, you can see that a service principal named fdc45ce4-5f16-43d6-ae4d-ee108428289f is assigned to the two roles. The service principal name is the id of the binding that was provided to the service broker when binding the service.

Details

For the current version of the broker, I added all code directly to the BindAsync method of the ServiceBindingBlocking class, creating a rather large method that does everything. In the next version of the broker I will switch to an asynchronous implementation and take the opportunity to clean things up.

But for now, we'll just take a look at what's happening inside the BindAsync method. First, we retrieve all storage accounts from the Azure subscription that have a tag that matches the service instance id.

This is also a good moment to validate the bind request by verifying that a storage account with the service instance id tag actually exists.
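The lookup and the validation can be sketched as follows. This is not the broker's actual code: the accounts are an in-memory stand-in for what the Azure subscription returns, and the tag name service_instance_id and the helper FindAccountsForInstance are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: returns the names of all accounts whose tag `tagName`
// equals the given service instance id.
static List<string> FindAccountsForInstance(
    IEnumerable<(string Name, Dictionary<string, string> Tags)> accounts,
    string tagName,
    string serviceInstanceId)
{
    return accounts
        .Where(a => a.Tags.TryGetValue(tagName, out var value) && value == serviceInstanceId)
        .Select(a => a.Name)
        .ToList();
}

// In-memory stand-in for the storage accounts in the subscription.
var accounts = new List<(string Name, Dictionary<string, string> Tags)>
{
    ("65cef50071f949f0819c5308",
        new Dictionary<string, string> { ["service_instance_id"] = "instance-1" }),
    ("unrelatedaccount", new Dictionary<string, string>()),
};

var matches = FindAccountsForInstance(accounts, "service_instance_id", "instance-1");
var missing = FindAccountsForInstance(accounts, "service_instance_id", "instance-2");

// A bind request is only valid when an account exists for the instance.
Console.WriteLine(matches.Count == 1
    ? $"Bind against {matches[0]}"
    : "Reject bind request");
Console.WriteLine(missing.Count == 0
    ? "Reject bind request: unknown service instance"
    : $"Bind against {missing[0]}");
```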

Next we create the Azure AD application that corresponds to this binding. Note that we give it a display name and identifier URI that match the binding id.

The next step is to create the service principal that corresponds to the AD application.

Then we assign this principal to two predefined Azure Storage roles with predefined ids.
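To give an idea of what such a role assignment consists of, the sketch below builds the scope and role definition resource ids for both roles. It makes no Azure calls. The subscription id, resource group name and principal id are placeholders, the helper names are hypothetical, and the role GUIDs are the built-in role ids as I understand them from the Azure documentation, so verify them before relying on them.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: resource id of the storage account, used as assignment scope.
static string StorageAccountScope(string subscriptionId, string resourceGroup, string accountName) =>
    $"/subscriptions/{subscriptionId}/resourceGroups/{resourceGroup}" +
    $"/providers/Microsoft.Storage/storageAccounts/{accountName}";

// Hypothetical helper: resource id of a role definition within the subscription.
static string BuildRoleDefinitionId(string subscriptionId, string roleGuid) =>
    $"/subscriptions/{subscriptionId}/providers/Microsoft.Authorization/roleDefinitions/{roleGuid}";

// Assumption: built-in role GUIDs; check them against the Azure documentation.
var roles = new Dictionary<string, string>
{
    ["Storage Blob Data Contributor"] = "ba92f5b4-2d11-453d-a403-e96b0029c9fe",
    ["Storage Queue Data Contributor"] = "974c5e8b-45b9-4653-ba55-5f855dd0fb88",
};

// Placeholder identifiers for illustration only.
var subscriptionId = "00000000-0000-0000-0000-000000000000";
var principalId = "22222222-2222-2222-2222-222222222222";
var scope = StorageAccountScope(subscriptionId, "my-resource-group", "65cef50071f949f0819c5308");

// One assignment per role: (scope, role definition, principal).
var assignments = new List<(string Scope, string RoleDefinitionId, string PrincipalId)>();
foreach (var role in roles)
{
    assignments.Add((scope, BuildRoleDefinitionId(subscriptionId, role.Value), principalId));
    Console.WriteLine($"{role.Key}: principal {principalId} on {scope}");
}
```

Each tuple holds what a role assignment needs: where it applies, which role it grants, and to whom.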

Because we want to give our client application some options to choose from when accessing the storage account, we also get the access keys to return in the credentials object.

We finally have all the necessary information to build our credentials object that will be added to the VCAP_SERVICES environment variable of the client application that we bind to.
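For illustration, a credentials object with the three blocks described earlier (OAuth settings, shared keys and URLs) could be assembled like this. All property names and values below are hypothetical and will not match the broker's real output exactly; only the top-level credentials field comes from the OSBAPI bind response. I use System.Text.Json here, which is an assumption about the serializer.

```csharp
using System;
using System.Text.Json;

// Placeholder values for illustration only.
var accountName = "65cef50071f949f0819c5308";
var tenantId = "00000000-0000-0000-0000-000000000000";

var bindResponse = new
{
    credentials = new
    {
        // Block 1: what the client needs for the OAuth 2.0 client credentials flow.
        oauth_client = new
        {
            client_id = "11111111-1111-1111-1111-111111111111",
            client_secret = "example-secret",
            token_endpoint = $"https://login.microsoftonline.com/{tenantId}/oauth2/v2.0/token",
            scopes = new[] { "https://storage.azure.com/.default" },
            grant_type = "client_credentials",
        },
        // Block 2: the shared keys of the storage account.
        shared_keys = new[]
        {
            new { name = "key1", value = "example-key-1" },
            new { name = "key2", value = "example-key-2" },
        },
        // Block 3: the storage service endpoints.
        urls = new
        {
            blob = $"https://{accountName}.blob.core.windows.net",
            queue = $"https://{accountName}.queue.core.windows.net",
            table = $"https://{accountName}.table.core.windows.net",
            file = $"https://{accountName}.file.core.windows.net",
        },
    },
};

var json = JsonSerializer.Serialize(bindResponse, new JsonSerializerOptions { WriteIndented = true });
Console.WriteLine(json);
```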

Conclusion

The last two posts had less to do with service brokers and more with Azure. However, you only run into real issues with implementing service brokers when you provision and bind real services. One issue I already anticipated is that provisioning and binding services may take time. So instead of doing this in a blocking way, we may want to leverage the asynchronous support that the OSBAPI offers.

Another thing that's important is doing everything you can to keep your service broker stateless. This essentially means that you must encode the information that Cloud Foundry provides inside your backend system. For example, when binding we receive a binding id from PCF. We use this binding id as the name for an Azure AD application. When we unbind, we get the same binding id from PCF so we can locate the Azure AD app and delete it. This may not be possible in every backend system, in which case we have to keep track somewhere of how Cloud Foundry identifiers (service instance and binding ids) map to backend concepts.

In the next post we will implement asynchronous service provisioning and polling to better handle long-running operations.